Explore Our Latest Insights on Artificial Intelligence (AI). Learn More.

AI Security Risks and Recommendations: Demystifying the AI Box of Magic

by Alex Cowperthwaite, Pratik Amin

Fri, Apr 12, 2024


It's undeniable that 2024 will be a pivotal year for companies seeking to adapt to the challenges and opportunities posed by artificial intelligence (AI). AI made enormous gains in 2023, which proved to be a turbulent year with significant advances and frequent announcements, publications and new products. We expect this growth curve will continue on a similar trajectory in the year ahead.

Keeping up with around 4,000 new publications per month discussing techniques, breakthroughs and pitfalls, especially in the field of generative AI, may already be an obsession for some members of your organization. However, even the most dedicated colleagues may lack the bandwidth to keep pace with rapidly developing capabilities and their shadow side, let alone identify the best path forward. Another complication is that academics devoted to this space disagree strongly about the best way to set policy around AI. Some organizations have clearly defined prohibitions against using AI for company projects, while others have already established aggressive AI programs.

This article is the first in a series aimed at outlining key considerations for developing an AI business strategy. Future articles will delve into the various components of AI systems, including types of models, business requirements, effective use cases, pitfalls and other critical issues.

Imagine you write for a living, for example as a lawyer, consultant, journalist or marketing executive, or perhaps you are a graphic designer or visual artist. A new hire joins your organization, thrilled to be entering the workforce. This colleague willingly agrees to write all of your first drafts or generate your images, and takes your feedback for refinements over and over, at no cost, for as long as it takes to get it right. Although this new resource has not worked in your field for long, they have gone to graduate school in nearly every subject and have read almost everything.

Many workers would be thrilled that, in theory, they never again need to start from scratch when they generate a first draft. The new worker can learn and create in any voice and any visual style. Some will grow concerned that this new resource is so impressive that it puts their own job at risk over the medium term. Still others will predict that job losses will be just the beginning, and that this new resource can learn on a curve so steep that it will become everyone’s boss in a short amount of time. This is a simple analogy that doesn't capture the depth of the changes likely to touch nearly every sector of the economy, from medicine to logistics, law, media and beyond.

Some companies are already able to quantify the financial and productivity savings from AI tools officially endorsed by the business, and the gains are considerable, even at this early stage. For example, Kroll Cyber Risk works extensively with PowerShell event logs. We trained a machine learning (ML) model to classify PowerShell scripts as “obfuscated” or “not obfuscated.” An obfuscated script can bypass signature-based detection, whereas antivirus signatures will work for scripts seen in plain text. Suppose Kroll has to analyze a large number of a certain type of event log, known as a 4104 event, to distinguish between the two, and that this takes dozens of hours: what is the productivity gain of using an ML model to flag obfuscated text for an investigation? We undoubtedly saved dozens of hours that would otherwise have been spent simply locating the logs that called for a full analysis.
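The article does not describe how Kroll's model works, so the following is only a minimal sketch of the general idea, under stated assumptions: each 4104 event (PowerShell script block logging) carries the script text, a few hand-picked features are computed from it (character entropy, punctuation density, backtick escapes, long Base64-like runs), and a logistic regression is fitted by plain gradient descent. The sample scripts, feature choices and function names are all hypothetical; a real model would need a large labeled corpus and proper evaluation.

```python
import math
import re
from collections import Counter

# Hypothetical toy samples standing in for the script text of 4104 events.
PLAIN = [
    "Get-ChildItem -Path C:\\Users -Recurse | Where-Object { $_.Length -gt 1MB }",
    "Write-Host 'Backup completed successfully'",
    "Get-Service | Where-Object Status -eq 'Running'",
]
OBFUSCATED = [
    "`I`E`X (('{0}{1}' -f 'Wri','te-Host') 'hi')",
    "powershell -EncodedCommand " + "JABhAD0AJwBoAGkAJwA" * 3,
    "&([char]73+[char]69+[char]88) ('Wr'+'ite'+'-Ho'+'st hi')",
]
SAMPLES = PLAIN + OBFUSCATED
LABELS = [0] * len(PLAIN) + [1] * len(OBFUSCATED)


def shannon_entropy(text: str) -> float:
    """Bits per character; encoded or packed payloads tend to score higher."""
    if not text:
        return 0.0
    n = len(text)
    return -sum(c / n * math.log2(c / n) for c in Counter(text).values())


def features(script: str) -> list[float]:
    """Illustrative features only, not a vetted detection feature set."""
    n = max(len(script), 1)
    symbols = sum(1 for ch in script if not ch.isalnum() and not ch.isspace())
    return [
        shannon_entropy(script) / 8.0,  # entropy scaled to roughly [0, 1]
        symbols / n,  # punctuation density
        10 * script.count("`") / n,  # backtick escapes, a common obfuscation trick
        1.0 if re.search(r"[A-Za-z0-9+/=]{40,}", script) else 0.0,  # long Base64-like run
    ]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(xs: list[list[float]], ys: list[int], epochs: int = 10000, lr: float = 1.0):
    """Logistic regression fitted with full-batch gradient descent."""
    w, b = [0.0] * len(xs[0]), 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / len(xs) for wi, g in zip(w, gw)]
        b -= lr * gb / len(xs)
    return w, b


def is_obfuscated(script: str, w: list[float], b: float) -> bool:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, features(script))) + b) >= 0.5


if __name__ == "__main__":
    w, b = train([features(s) for s in SAMPLES], LABELS)
    for s in SAMPLES:
        print(is_obfuscated(s, w, b), s[:50])
```

In a workflow like the one described, only the events the model flags would then go on to full manual analysis, which is where the time saving comes from.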

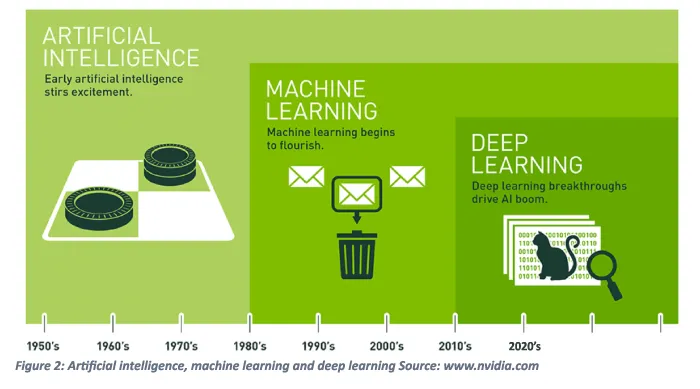

Artificial intelligence is the development of intelligence using computer algorithms. AI systems are built on modeling techniques. These modeling techniques are grouped by how we “train” them.

Machine learning includes the following categories:

Generative AI, which draws on the above training methods to varying degrees depending on the system, generates text from inputs (called “prompts”), images from text, and videos from text and/or image prompts. Large language models such as ChatGPT and Bard are generative AI. Image and video generation services such as DALL-E, Midjourney, Pika Labs and Stable Diffusion are also well-known generative AI products. Many people think of these generative AI functions as the essence of AI, but AI is a much broader field: it includes a large subset known as machine learning, which in turn contains a subset known as deep learning. Some, but not all, deep learning applications are generative.

We owe the now-mainstream adoption and awareness of AI to breakthroughs in deep learning, which began in earnest in the 2010s after a group of scientists pursued an improbable line of research into “neural nets” that loosely simulate neurons in the human brain.

A seminal 2017 paper by Google researchers, “Attention Is All You Need,” introduced the transformer. Some people assume that the term “transformer” refers to hardware, but transformer architectures are built from equations: the magic of generative AI is all mathematical, mainly linear algebra. The hardware components and configurations are massive but basic. While we would love to go on discussing AI’s fascinating history, architectures, breakthroughs and capabilities, this will have to wait for future articles. For the interested reader, we offer three recommendations for introductory research:

For a deeper dive, we highly recommend:
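Returning to the point that transformer architectures are built from equations: the core operation introduced in “Attention Is All You Need” is scaled dot-product attention, Attention(Q, K, V) = softmax(QKᵀ / √d_k) V. The sketch below implements that single formula for one attention head in plain Python, with toy matrices as assumptions; production models add learned projections, multiple heads, masking and batching on GPU tensor libraries.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-loop matrix product (rows of a times columns of b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for a single head, without masking or batching."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # similarity of every query to every key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # each output row is a weighted average of V's rows


if __name__ == "__main__":
    q = [[1.0, 0.0], [0.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    print(attention(q, k, v))
```

Every step is a matrix product, a scaling or a normalization, which is the sense in which the “magic” is linear algebra rather than special hardware.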

The best answer to the question of which AI is right for your organization is a short series of additional questions: What do you want AI to do for your organization? What problems are you looking to solve? What is the value of having AI, rather than your existing personnel, solve those problems? It may well be that several smaller models and deployments, separately trained for specific uses, will be better than a single AI tool. We believe that companies that embrace modularity, building small sets of AI models that can each be trained individually on local data, will outperform companies that rely on a single principal language model for all employees.
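One way to picture the modular approach is a registry that maps each concrete problem to its own small model, so that any one model can be retrained on local data or replaced without touching the others. In this minimal sketch, the task names and the keyword-based “models” are hypothetical stand-ins for separately trained models.

```python
from typing import Callable, Dict, List

Model = Callable[[str], str]


class ModelRegistry:
    """Maps each task to the small model trained for it (a hypothetical design)."""

    def __init__(self) -> None:
        self._models: Dict[str, Model] = {}

    def register(self, task: str, model: Model) -> None:
        self._models[task] = model  # re-registering a task swaps in a retrained model

    def run(self, task: str, text: str) -> str:
        if task not in self._models:
            raise KeyError(f"no model registered for task {task!r}")
        return self._models[task](text)

    def tasks(self) -> List[str]:
        return sorted(self._models)


# Keyword stand-ins for separately trained, task-specific models.
def contract_clause_tagger(text: str) -> str:
    return "indemnification" if "indemnif" in text.lower() else "other"


def ticket_prioritizer(text: str) -> str:
    return "urgent" if "outage" in text.lower() else "normal"


registry = ModelRegistry()
registry.register("contract_clauses", contract_clause_tagger)
registry.register("support_tickets", ticket_prioritizer)
```

Because each task has its own entry, a poorly performing model can be retrained or replaced in isolation, and an unregistered task fails loudly instead of silently falling through to a general-purpose model.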

To deploy AI at your organization, you will need to weigh cost, security, personnel, compatibility, competitive position, and ethical and regulatory dilemmas. These factors will all influence the best path forward for your organization, and an AI business strategy helps you understand and handle these difficult questions. A robust AI strategy works through these choices systematically, like a decision tree, and includes a cost/benefit analysis together with risks and countermeasures.

It is our belief that most consumer-facing and business-facing organizations would be well-advised to adopt some forms of AI to enhance productivity, increase equity value and, quite simply, avoid missing out. But the precise technical footprint of the AI tools deployed should be carefully tailored to specific problem sets; in other words, companies should select problems for which both the issue and the desired outcome can be readily understood. Companies should decide whether the AI tool will be strictly for employee use or public facing. Companies also need to understand the risks that could arise from their use of AI. Many risks stem from biases in the data, which can produce outputs that, in turn, lead to business decisions out of alignment with the company’s ethical principles, creating litigation risk.
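One common first check for bias in model outputs is to compare the rate of favorable outcomes across groups. The sketch below computes each group's selection rate and the ratio of the lowest rate to the highest; U.S. employment-selection guidance (the “four-fifths rule”) treats a ratio below 0.8 as evidence of possible adverse impact. The groups and outcomes are hypothetical, and a single metric like this is a screening signal, not a full fairness assessment.

```python
from collections import defaultdict


def selection_rates(groups, outcomes):
    """Share of favorable outcomes (1s) within each group."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        favorable[group] += int(outcome)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_ratio(groups, outcomes):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(groups, outcomes)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical model decisions for two applicant groups.
    groups = ["A"] * 10 + ["B"] * 10
    outcomes = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
    ratio = disparate_impact_ratio(groups, outcomes)
    print(f"ratio={ratio:.2f}", "flag for review" if ratio < 0.8 else "ok")
```

In this made-up example, group A is approved 60% of the time and group B 30%, giving a ratio of 0.5, well below the 0.8 threshold, so the model's decisions would warrant a closer look before they drive business decisions.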

The main reason to develop an AI business strategy is that AI is arguably the most disruptive technology developed to date. Accordingly, how a company approaches it is likely to be a make-or-break decision, even for companies that don’t yet recognize this. A carefully considered strategy will allow you to gain or maintain a competitive advantage. It can also accelerate adoption across your user base and bring cost certainty to your organization’s use of AI. Most importantly, it should enable you to manage downside risk. In addition to biased model outputs, some of the commonly discussed areas of risk for businesses using AI for internal productivity include:

For companies interested in deploying generative AI, one of the first decisions to explore is the choice between commercial large language models (LLMs) and open-source (often referred to as “open weight”) LLMs that are fine-tuned internally.

OpenAI’s ChatGPT is widely regarded as the largest and most sophisticated of the so-called frontier commercial LLMs. Others not far behind, if at all, include Anthropic’s Claude 3 Opus, Google Gemini, Perplexity AI and Aleph Alpha.

Open weight models include Meta’s Llama 2 and Mistral’s Mixtral 8x7B.

Some organizations benefit from a hybrid mix of commercial LLMs and open weight LLMs. Open weight models can be deployed on privately hosted infrastructure behind a company’s firewall and trained on highly sensitive information without the risk of accidental disclosure. Commercial LLM vendors also often offer enterprises the option to deploy models in local, privately hosted environments. Companies using cloud-hosted commercial LLM solutions are generally either using less sensitive data or paying for local deployments for sensitive data, which can be expensive.

Many companies process, manage and/or retain sensitive data. Because we strongly advise clients to avoid exposing sensitive data to train or prompt commercial LLM platforms, many or most enterprises will need to develop internal models. In some cases, routing mechanisms may exist to assist the enterprise in sending sensitive data to internal models and non-sensitive data to less restrictive LLM platforms. Commercial cloud-hosted language models from the major players do offer robust security, but many companies work with data that is especially sensitive and may choose to keep this data on premises by using only open-source models that they can fine-tune. Open-source models can also be customized with greater flexibility to meet the specific automation needs of particular segments of the business. These types of models require a higher initial investment due to the costs of expertise and infrastructure but can be highly cost-effective in the medium- to long-term, as clients can avoid recurring and per-use costs.
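A routing mechanism of the kind mentioned above can be sketched as a pre-filter that inspects each prompt and sends anything that looks sensitive to an internal model. This minimal version assumes three regular-expression detectors (email addresses, U.S. Social Security numbers and Luhn-valid card numbers) and hypothetical destination labels; real deployments would rely on a vetted data loss prevention or PII classification service rather than a handful of patterns.

```python
import re
from dataclasses import dataclass, field

# Deliberately simple, hypothetical detectors.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used here to cut false positives on long digit runs."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


@dataclass
class Route:
    destination: str  # hypothetical labels: "internal" or "external"
    reasons: list[str] = field(default_factory=list)


def find_sensitive(text: str) -> list[str]:
    hits = []
    for name, pattern in SENSITIVE_PATTERNS.items():
        for match in pattern.finditer(text):
            if name == "card_number" and not luhn_valid(re.sub(r"\D", "", match.group())):
                continue  # long number that fails the checksum
            hits.append(name)
            break  # one hit per detector is enough
    return hits


def route(prompt: str) -> Route:
    """Send anything that looks sensitive to the internal model."""
    hits = find_sensitive(prompt)
    return Route("internal" if hits else "external", hits)
```

Recording the reasons alongside the destination makes routing decisions auditable, which matters when the point of the router is to demonstrate that sensitive data never reached an external platform.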

One obvious consideration with using a commercial cloud-hosted solution such as ChatGPT is that it requires an internet connection. However, these tools offer companies the fastest path to AI, are in many ways the easiest to use, and offer the most cutting-edge technology. Particularly in the short term, they are the most cost-effective and scalable options. Employees will already be familiar with them and may have direct experience of using them for non-work applications. Commercial LLM providers now also offer private onramps, either through locally hosted models inside the company firewall or as add-ons through services such as Azure, Salesforce or Databricks that the company may already be using.
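The cost trade-off between self-hosted open-source models and commercial services can be framed as a simple break-even calculation: self-hosting costs an up-front investment U plus running costs S per month, while a commercial service costs C per month, so self-hosting pays back after U / (C - S) months when C > S. The sketch below assumes flat monthly costs, and every figure in it is a hypothetical placeholder, not real vendor or Kroll pricing.

```python
import math


def break_even_months(upfront, self_hosted_monthly, commercial_monthly):
    """First whole month at which cumulative self-hosted cost no longer exceeds commercial cost.

    Assumes flat monthly costs. Returns None if self-hosting never pays back.
    """
    monthly_saving = commercial_monthly - self_hosted_monthly
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront / monthly_saving)


if __name__ == "__main__":
    # Hypothetical placeholder figures, in any single currency.
    print(break_even_months(upfront=240_000, self_hosted_monthly=15_000, commercial_monthly=35_000))
```

With these made-up figures, the monthly saving is 20,000 and the break-even point is 12 months; before that, the commercial service is cheaper, which is consistent with commercial tools being the most cost-effective option in the short term.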

Some Kroll clients are taking advantage of licenses with commercial LLMs that allow them to run models locally on their own infrastructure, rather than relying on cloud-based services.

OpenAI has recently announced a new deployment option for its GPT models, which allows customers to run the models on their own hardware, within their own firewall. This option is designed for customers with specific security, privacy or regulatory requirements that may prevent them from using cloud-based services. Similarly, Anthropic has announced that it is offering on-premises deployment options for its large language models. This allows customers to run the models on their own infrastructure, giving them more control over their data and greater flexibility in integrating the models into their existing systems. Aleph Alpha specializes in allowing companies to utilize high-sensitivity data in AI and works with government agencies and defense contractors.

It's worth noting that these local deployment options may require additional technical expertise and resources to set up and maintain, but they offer a way for organizations to leverage the power of large language models while maintaining greater control over their data and systems.

Both Microsoft and Salesforce offer a range of products and services that leverage AI and can be easily integrated into businesses through existing licenses. Microsoft Copilot is a suite of AI-powered tools that can be integrated into various Microsoft products, including Office, Dynamics and Azure. With an existing license for these products, businesses can access features like natural language processing, machine learning and predictive analytics. For example, Microsoft Copilot in Office can help users generate meeting notes, write emails and create presentations more efficiently. In Dynamics, it can provide sales and customer service teams with real-time insights and recommendations based on customer data. In Azure, it can help developers build and deploy AI models more quickly and effectively.

Salesforce Einstein is an AI platform that can be integrated into various Salesforce products, including Sales Cloud, Service Cloud and Marketing Cloud. With an existing license for these products, businesses can access features like predictive analytics, intelligent process automation and personalized customer experiences. For example, Salesforce Einstein in Sales Cloud can help sales teams predict customer behavior, identify the most promising leads and automate routine tasks. In Service Cloud, it can provide service teams with intelligent case routing, chatbots, and automatic recommendations for resolving issues. In Marketing Cloud, it can help marketers deliver personalized messaging and offers to customers based on their behavior and preferences. By leveraging these existing commercial licenses, businesses can quickly and easily integrate AI into their operations, improving efficiency, driving insights and enhancing customer experiences.

Understanding your data is critical because, as a rule of thumb, AI is 95% data and 5% algorithm. Key questions to ask about your data include: Is some of it subject to privacy regulations? Is it in a place where you can use it without having to move it (which is expensive)?

Preparing and managing data for machine learning is a critical step that will dramatically improve your chances of building a competitive advantage with an AI program. The first step is to ensure that data is collected and stored appropriately. Companies should have the necessary infrastructure and tools in place to collect the data they need, whether through manual entry, data imports or API integrations. The data should be stored in a secure, centralized and, ideally, permanent location that a machine learning team can easily access.

The processes of data cleaning and pre-processing are essentially protocols to confirm that the data is accurate, consistent and suitable for machine learning. These protocols include correcting errors, removing duplicates, standardizing formats and filling in missing values. Data pre-processing may also include feature engineering, where new features are created, or existing features are transformed to improve the model's performance.
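The cleaning steps listed above can be illustrated on a few hypothetical customer records using only the Python standard library. This minimal sketch standardizes names and date formats, drops duplicates that only become visible after standardizing, treats an impossible negative value as missing, fills missing values with the median, and engineers one new feature (tenure in days). Field names, records and the reference date are assumptions; at scale, a dataframe library would normally do this work.

```python
from datetime import date, datetime
from statistics import median

# Hypothetical raw records showing typical problems.
RAW = [
    {"customer": " Acme Corp ", "signup": "2023-01-15", "spend": "1200"},
    {"customer": "acme corp", "signup": "15/01/2023", "spend": "1200"},  # duplicate, other date format
    {"customer": "Globex", "signup": "2023-03-02", "spend": None},  # missing value
    {"customer": "Initech", "signup": "2023-02-20", "spend": "-50"},  # impossible negative spend
    {"customer": "Umbrella", "signup": "2023-06-30", "spend": "300"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(value):
    """Standardize dates written in any of the known formats; None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date()
        except ValueError:
            continue
    return None


def clean(records, as_of=date(2024, 1, 1)):
    cleaned, seen = [], set()
    for rec in records:
        name = " ".join(rec["customer"].split()).title()  # standardize whitespace and case
        signup = parse_date(rec["signup"])
        spend = float(rec["spend"]) if rec["spend"] is not None else None
        if spend is not None and spend < 0:
            spend = None  # correct an impossible value by treating it as missing
        key = (name, signup)
        if key in seen:
            continue  # drop duplicates after standardizing
        seen.add(key)
        cleaned.append({"customer": name, "signup": signup, "spend": spend})

    known = [r["spend"] for r in cleaned if r["spend"] is not None]
    fill = median(known) if known else 0.0
    for r in cleaned:
        if r["spend"] is None:
            r["spend"] = fill  # impute missing values with the median
        # Feature engineering: derive tenure from the signup date.
        r["tenure_days"] = (as_of - r["signup"]).days if r["signup"] else None
    return cleaned
```

Note that the two Acme rows only look identical after both the name and the date have been standardized, which is why deduplication runs after formatting rather than before.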

Efforts to label and annotate the data appropriately may involve categorizing it into classes and annotating data with relevant information, such as the location of objects in an image or the sentiment of a text document. Although labeling and annotation are often done manually, they can also be undertaken with automation in some cases; the suitability of automated tools depends on the type and volume of data.
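A common pattern for combining automated and manual labeling is to let simple rules pre-label the easy cases and route everything else to human annotators. The keyword lists below are hypothetical and far too crude for production use; the point is the structure, in which the labeler abstains when it has no clear signal and abstentions go to a manual review queue.

```python
POSITIVE = {"great", "excellent", "resolved", "thanks"}  # hypothetical keyword lists
NEGATIVE = {"broken", "refund", "terrible", "late"}


def auto_label(text):
    """Return "positive" or "negative", or None to abstain when the signal is absent or tied."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    pos, neg = len(words & POSITIVE), len(words & NEGATIVE)
    if pos > neg:
        return "positive"
    if neg > pos:
        return "negative"
    return None


def label_batch(texts):
    """Split texts into auto-labeled pairs and a queue for human annotators."""
    labeled, needs_review = [], []
    for text in texts:
        label = auto_label(text)
        if label is None:
            needs_review.append(text)
        else:
            labeled.append((text, label))
    return labeled, needs_review
```

How much of a dataset such rules can safely cover depends on the type and volume of data; spot-checking a sample of the automatic labels against human judgments is a reasonable way to decide.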

A crucial final step in designing a machine learning program as part of your AI business strategy is to split the data into training, validation and test sets to evaluate a chosen model's performance and avoid overfitting, in which the model performs well on the training data but poorly on new data. The training set is used to train the model, the validation set is used to tune the model's hyperparameters, and the test set is used to evaluate the model's performance on unseen data. Data should be divided into these sets randomly and representatively to avoid bias and ensure that the model generalizes well to the real world.
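A random and representative split can be sketched as a stratified split: records are grouped by class, shuffled with a fixed seed, and each class is divided in the same proportions, so that rare classes appear in all three sets. The 70/15/15 ratios and the seed are assumptions chosen for illustration.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, train=0.7, val=0.15, seed=42):
    """Split (item, label) pairs into train/val/test sets, preserving class proportions."""
    by_class = defaultdict(list)
    for item, label in zip(items, labels):
        by_class[label].append((item, label))

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    splits = {"train": [], "val": [], "test": []}
    for members in by_class.values():
        rng.shuffle(members)  # random assignment within each class
        n_train = round(len(members) * train)
        n_val = round(len(members) * val)
        splits["train"] += members[:n_train]
        splits["val"] += members[n_train:n_train + n_val]
        splits["test"] += members[n_train + n_val:]

    for part in splits.values():
        rng.shuffle(part)  # interleave classes within each split
    return splits
```

With 100 records of which 20 are positive, this yields sets of 70, 15 and 15 records containing 14, 3 and 3 positives respectively, so each set mirrors the overall 20% positive rate.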

As part of a broader AI business strategy, deploying AI for employee or customer use will require the guardrails of an AI policy. We will look at this in detail in a future article. At a minimum, product documentation, user guidance and rule setting around safe use of the tool(s), frequently asked questions, and training materials will be important aspects of your policy documentation.

As mentioned earlier, some Kroll clients have set definitive prohibitions against using AI for company work, while others already have aggressive AI programs in place. Companies that determine that an AI investment makes sense, manage the downside risk, prepare and label their proprietary data, solicit input from the widest possible group of users, use modularity to tailor machine learning applications to concrete problems, and create solid policy documentation will undoubtedly fare best. At this critical stage of the first AI wave, the best, and probably the only, path is through strategic and informed trial and error, and in this regard, nearly all companies are in the same boat. This involves working with specialist partners with proven real-world experience in enabling organizations to manage and mitigate the potential risks of AI. Kroll reduces the risks of responding swiftly to new commercial opportunities through services such as security validation and assessments, security advisory services and digital risk protection.


